1. System Environment

1.1 Environment preparation

| Role | IP | Services |
| --- | --- | --- |
| k8s-master01 | 192.168.66.31 | etcd, containerd, kube-apiserver, kube-scheduler, kube-controller-manager, kubelet, kube-proxy |
| k8s-node01 | 192.168.66.41 | etcd, containerd, kubelet, kube-proxy |
| k8s-node02 | 192.168.66.42 | etcd, containerd, kubelet, kube-proxy |

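1.2 Environment initialization

1.2.1 All settings in one script

```
# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux (permanently, and switch to permissive for the current boot)
sed -i 's/enforcing/disabled/' /etc/selinux/config
setenforce 0

# Disable swap (now, and comment it out of /etc/fstab)
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab

# Load br_netfilter at boot and right now
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF
modprobe br_netfilter

# Pass bridged traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system
```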

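1.2.2 Step-by-step settings

Disable the firewall and SELinux:

```
# Disable the firewall
$ systemctl stop firewalld
$ systemctl disable firewalld

# Disable SELinux
$ sed -i 's/enforcing/disabled/' /etc/selinux/config
$ setenforce 0
```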

Disable swap:

```
$ swapoff -a
$ sed -ri 's/.*swap.*/#&/' /etc/fstab
```
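Because `sed -ri 's/.*swap.*/#&/'` comments out every line that mentions swap, it is worth confirming afterwards that no active swap entry is left. A minimal sketch, assuming a standard whitespace-separated fstab; `active_swap_entries` is a hypothetical helper name, not part of any tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print fstab entries that would still enable swap at boot.
# Usage: active_swap_entries [fstab-path]   (defaults to /etc/fstab)
active_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  # Skip comment lines; the 3rd field of an fstab entry is the filesystem type.
  awk '!/^[[:space:]]*#/ && NF >= 3 && $3 == "swap" { print }' "$fstab"
}

# Example: warn if swap would come back after a reboot.
# [ -n "$(active_swap_entries)" ] && echo "swap is still enabled in /etc/fstab"
```

For the running system, `swapon --show` printing nothing confirms that swap is off as well.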

Pass bridged IPv4 traffic to the iptables chains:

```
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

# Load the module and apply the settings without rebooting
modprobe br_netfilter
sysctl --system
```

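Time synchronization:

```
yum install ntpdate -y
# Replace <ntp-server> with the NTP server you use
ntpdate <ntp-server>
# Write the system time to the hardware clock
clock -w
```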

Install the IPVS tools:

```
yum install ipset ipvsadm -y
```

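1.2.3 Hostname settings

Set the hostname on each machine (run the matching line on that host), then add all three entries to /etc/hosts on every node:

```
hostnamectl set-hostname k8s-master01   # on 192.168.66.31
hostnamectl set-hostname k8s-node01     # on 192.168.66.41
hostnamectl set-hostname k8s-node02     # on 192.168.66.42

cat >> /etc/hosts << EOF
192.168.66.31 k8s-master01
192.168.66.41 k8s-node01
192.168.66.42 k8s-node02
EOF
```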
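`cat >> /etc/hosts` appends the entries again every time it runs. A sketch of an idempotent variant, assuming one `IP hostname` pair per line; `add_host_entry` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" to a hosts file unless the
# hostname already appears on a non-comment line.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example for this cluster (run on every node):
# for entry in "192.168.66.31 k8s-master01" "192.168.66.41 k8s-node01" "192.168.66.42 k8s-node02"; do
#   add_host_entry /etc/hosts $entry   # unquoted on purpose: splits into IP and name
# done
```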

1.2.4 Directory initialization

Installation working directory:

```
mkdir -p /usr/local/k8s-install
```

Runtime directories:

```
mkdir -p /opt/kubernetes/{bin,ssl,cfg,logs}
mkdir -p /opt/etcd/{bin,ssl,cfg,data,wal}
```

2. Software Download

containerd:

```
wget -c https://github.com/containerd/containerd/releases/download/v1.6.5/cri-containerd-cni-1.6.5-linux-amd64.tar.gz
```

etcd:

```
wget -c https://github.com/etcd-io/etcd/releases/download/v3.5.0/etcd-v3.5.0-linux-amd64.tar.gz
```

Kubernetes binaries:

```
wget -c https://dl.k8s.io/v1.24.0/kubernetes-server-linux-amd64.tar.gz
```

3. Generate Certificates

Reference: PKI certificates and requirements | Kubernetes

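3.1 Generate the CA certificate

The CA (certificate authority) issues certificates and is the core of a public key infrastructure (PKI): it signs, verifies, and manages the certificates it has issued.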

(1) JSON for the CA certificate signing request (CSR)

```
$ cat > ca-csr.json <<END
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
END
```

(2) Generate the CA private key (ca-key.pem) and certificate (ca.pem)

```
$ cfssl gencert -initca ca-csr.json | cfssljson -bare ca
```

Generated files:

- ca-key.pem: private key
- ca.pem: certificate
- ca.csr: certificate signing request

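(3) Create the signing configuration JSON

Purpose: the configuration cfssl uses when signing certificates. 8760h is one year; the kubernetes profile is the one selected with -profile=kubernetes in the commands below.

```
$ cat > ca-config.json <<END
{
  "signing": {
    "default": {
      "expiry": "8760h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "8760h"
      }
    }
  }
}
END
```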

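3.2 apiserver

(1) JSON for the certificate signing request (CSR)

The hosts list must contain every address clients use to reach the apiserver: the first Service IP (10.0.0.1 for the 10.0.0.0/24 Service range used later), 127.0.0.1, the node IPs, and the kubernetes.default.* names.

```
cat > server-csr.json << END
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "192.168.66.31",
    "192.168.66.41",
    "192.168.66.42",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
END
```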

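(2) Sign the certificate

Using the CA certificate and key, the signing configuration, and the CSR file, cfssl generates the private key and signed certificate for the API server:

```
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
```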

Generated files:

- server-key.pem: private key
- server.pem: certificate
- server.csr: certificate signing request
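To see what "the CA signs a certificate" means without cfssl, the same flow can be reproduced with openssl in a throwaway directory. This is an illustration only, assuming openssl is installed; the file names merely mimic the ones above and the subject `demo-server` is hypothetical. The cluster certificates themselves should still come from the cfssl commands.

```shell
#!/usr/bin/env bash
# Illustration only: reproduce CA -> CSR -> signed certificate with openssl.
set -euo pipefail

cd "$(mktemp -d)"

# 1. Self-signed CA: private key and certificate (the roles of ca-key.pem / ca.pem).
openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
  -keyout ca-key.pem -out ca.pem -subj "/CN=kubernetes" 2>/dev/null

# 2. Server key and certificate signing request (the roles of server-key.pem / server.csr).
openssl req -newkey rsa:2048 -nodes \
  -keyout server-key.pem -out server.csr -subj "/CN=demo-server/O=k8s" 2>/dev/null

# 3. The CA signs the CSR, producing server.pem.
openssl x509 -req -in server.csr -CA ca.pem -CAkey ca-key.pem \
  -CAcreateserial -days 365 -out server.pem 2>/dev/null

# 4. Anyone holding only ca.pem can verify server.pem; prints "server.pem: OK".
openssl verify -CAfile ca.pem server.pem
```

Only ca.pem has to be distributed for verification; ca-key.pem stays with whoever signs.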

(3) Copy the certificates into place

```
cp {ca.pem,ca-key.pem,server.pem,server-key.pem} /opt/kubernetes/ssl
```

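3.3 kube-proxy

(1) JSON for the certificate signing request (CSR)

The CN must be system:kube-proxy: the built-in ClusterRoleBinding system:node-proxier grants kube-proxy's permissions to that user name.

```
cat > kube-proxy-csr.json << END
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
END
```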

(2) Sign the certificate

```
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
```

(3) Copy into place

```
cp {kube-proxy.pem,kube-proxy-key.pem} /opt/kubernetes/ssl
```

3.4 etcd

(1) Issue the etcd HTTPS certificate with the self-signed CA

Create the certificate request file (hosts lists the IPs of all three etcd members):

```
cat > etcd-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "192.168.66.31",
    "192.168.66.41",
    "192.168.66.42"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
```

(2) Generate the certificate. This creates the etcd key and certificate, stored by default as etcd-key.pem and etcd.pem:

```
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
```

Place the certificates for etcd:

```
cp {ca.pem,etcd-key.pem,etcd.pem} /opt/etcd/ssl/
```

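3.5 admin

kubectl, which we normally run as root, authenticates with this certificate. The O value system:masters puts the admin user in the group that Kubernetes binds to the cluster-admin role by default.

```
cat > admin-csr.json << EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF
```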

Generate the certificate:

```
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
```

3.6 kube-scheduler

```
cat > kube-scheduler-csr.json << EOF
{
  "CN": "system:kube-scheduler",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler
```

3.7 kube-controller-manager

```
cat > kube-controller-manager-csr.json << EOF
{
  "CN": "system:kube-controller-manager",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
```
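The client CSR files in this section repeat one layout and change only CN and O (with an empty hosts list). A sketch of a generator that keeps them consistent; `write_client_csr` is a hypothetical helper, and the names values simply mirror the ones used above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a client-certificate CSR JSON with an empty hosts list.
# Usage: write_client_csr FILE CN O
write_client_csr() {
  local file="$1" cn="$2" org="$3"
  cat > "$file" << EOF
{
  "CN": "${cn}",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "${org}",
      "OU": "System"
    }
  ]
}
EOF
}

# Example, with the CN/O values used above:
# write_client_csr kube-scheduler-csr.json          system:kube-scheduler          system:masters
# write_client_csr kube-controller-manager-csr.json system:kube-controller-manager system:masters
# ...then sign each file with the same cfssl gencert command as above.
```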

4. Kubernetes kubeconfig files

4.1 admin.config

```
mkdir -p /root/.kube
KUBE_CONFIG="/root/.kube/config"
KUBE_APISERVER="https://192.168.66.31:6443"
```

Set the cluster parameters:

```
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}
```

Set the client credentials:

```
kubectl config set-credentials cluster-admin \
  --client-certificate=./admin.pem \
  --client-key=./admin-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}
```

Set the context:

```
kubectl config set-context default \
  --cluster=kubernetes \
  --user=cluster-admin \
  --kubeconfig=${KUBE_CONFIG}
```

Set the default context:

```
kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```

4.2 kube-scheduler.config

kubeconfig for kube-scheduler:

```
KUBE_CONFIG="/opt/kubernetes/cfg/kube-scheduler.kubeconfig"
KUBE_APISERVER="https://192.168.66.31:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}
kubectl config set-credentials kube-scheduler \
  --client-certificate=/opt/kubernetes/ssl/kube-scheduler.pem \
  --client-key=/opt/kubernetes/ssl/kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-scheduler \
  --kubeconfig=${KUBE_CONFIG}
kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```

4.3 kube-controller-manager.config

kubeconfig for kube-controller-manager:

```
KUBE_CONFIG="/opt/kubernetes/cfg/kube-controller-manager.kubeconfig"
KUBE_APISERVER="https://192.168.66.31:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}
kubectl config set-credentials kube-controller-manager \
  --client-certificate=./kube-controller-manager.pem \
  --client-key=./kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-controller-manager \
  --kubeconfig=${KUBE_CONFIG}
kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```

4.4 kube-proxy.config

kubeconfig for kube-proxy:

```
KUBE_CONFIG="/opt/kubernetes/cfg/kube-proxy.kubeconfig"
KUBE_APISERVER="https://192.168.66.31:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}
kubectl config set-credentials kube-proxy \
  --client-certificate=./kube-proxy.pem \
  --client-key=./kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=${KUBE_CONFIG}
kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```

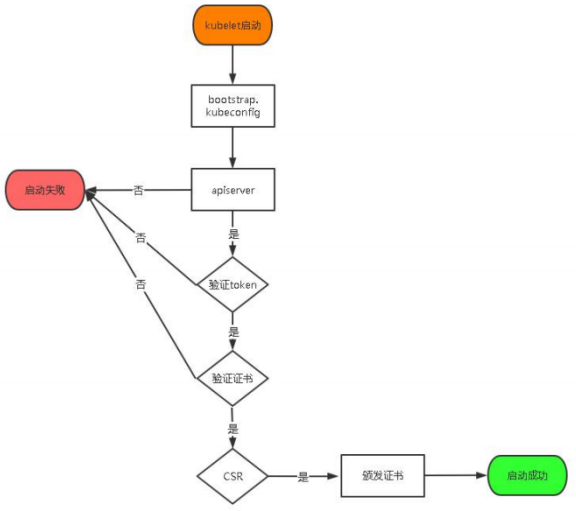
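The certificate-based kubeconfig files above are built with the same four `kubectl config` commands, differing only in user name, certificate pair and output file. A sketch of a wrapper, assuming certificate-based users as above; `make_cert_kubeconfig` and the `KUBECTL`/`CA_FILE` overrides are hypothetical (setting `KUBECTL=echo` prints the commands instead of running them):

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the four `kubectl config` steps used above.
# KUBECTL, KUBE_APISERVER and CA_FILE can be overridden from the environment.
KUBE_APISERVER="${KUBE_APISERVER:-https://192.168.66.31:6443}"
CA_FILE="${CA_FILE:-/opt/kubernetes/ssl/ca.pem}"

# Usage: make_cert_kubeconfig USER CERT KEY OUTPUT_KUBECONFIG
make_cert_kubeconfig() {
  local user="$1" cert="$2" key="$3" out="$4"
  local kubectl="${KUBECTL:-kubectl}"

  "$kubectl" config set-cluster kubernetes \
    --certificate-authority="$CA_FILE" \
    --embed-certs=true \
    --server="$KUBE_APISERVER" \
    --kubeconfig="$out" || return 1
  "$kubectl" config set-credentials "$user" \
    --client-certificate="$cert" \
    --client-key="$key" \
    --embed-certs=true \
    --kubeconfig="$out" || return 1
  "$kubectl" config set-context default \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$out" || return 1
  "$kubectl" config use-context default --kubeconfig="$out"
}

# Example, equivalent to the kube-proxy.kubeconfig steps above:
# make_cert_kubeconfig kube-proxy ./kube-proxy.pem ./kube-proxy-key.pem \
#   /opt/kubernetes/cfg/kube-proxy.kubeconfig
```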

4.5 bootstrap.config

Used by the kubelet TLS bootstrapping mechanism to obtain client certificates automatically. TOKEN must match the token in token.csv.

```
KUBE_CONFIG="/opt/kubernetes/cfg/bootstrap.kubeconfig"
KUBE_APISERVER="https://192.168.66.31:6443"
TOKEN="2883dba522d43d742dd88f3ce07cf52e"

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}
kubectl config set-credentials "kubelet-bootstrap" \
  --token=${TOKEN} \
  --kubeconfig=${KUBE_CONFIG}
kubectl config set-context default \
  --cluster=kubernetes \
  --user="kubelet-bootstrap" \
  --kubeconfig=${KUBE_CONFIG}
kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```

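4.6 Bootstrapping token.csv

Enabling TLS bootstrapping: once the master's apiserver enforces TLS authentication, the kubelet and kube-proxy on every node need a valid CA-signed certificate to talk to kube-apiserver. With many nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster harder. To simplify this, Kubernetes provides TLS bootstrapping: the kubelet authenticates as a low-privilege user and requests its certificate from the apiserver automatically. Once the request is approved, the certificate is signed dynamically by kube-controller-manager, using the CA configured with --cluster-signing-cert-file and --cluster-signing-key-file.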

TLS bootstrapping workflow:

Authorize the kubelet-bootstrap user to request certificates:

```
kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap
```

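Create the token file referenced in the configuration above:

```
cat > token.csv << EOF
2883dba522d43d742dd88f3ce07cf52e,kubelet-bootstrap,10001,system:node-bootstrapper
EOF
```

Format: token,user name,UID,user group

You can also generate your own token to replace it (use the same value as TOKEN in bootstrap.kubeconfig):

```
head -c 16 /dev/urandom | od -An -t x | tr -d ' '
```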
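A sketch that generates a fresh token and writes token.csv in the format above, assuming it is run in the directory where token.csv should live (`OUT_DIR` is a hypothetical override). The printed value then has to be used as TOKEN when building bootstrap.kubeconfig:

```shell
#!/usr/bin/env bash
# Sketch: create a random bootstrap token and the matching token.csv line
# (format: token,user name,UID,user group).
set -euo pipefail

OUT_DIR="${OUT_DIR:-.}"   # hypothetical override; defaults to the current directory

# 16 random bytes -> 32 lowercase hex characters (same pipeline as above).
TOKEN="$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')"

printf '%s,kubelet-bootstrap,10001,system:node-bootstrapper\n' "$TOKEN" > "${OUT_DIR}/token.csv"

# Use this value as TOKEN= when creating bootstrap.kubeconfig.
echo "TOKEN=${TOKEN}"
```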

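5. Deploy containerd

```
$ wget -c https://github.com/containerd/containerd/releases/download/v1.6.5/cri-containerd-cni-1.6.5-linux-amd64.tar.gz
# Unpack into /: installs the binaries, the systemd unit and the CNI plugins
$ tar -zxvf cri-containerd-cni-1.6.5-linux-amd64.tar.gz -C /
$ systemctl daemon-reload
$ systemctl enable containerd --now
```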

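Check the filesystem. If it is xfs, it must have been formatted with ftype=1, which the overlayfs snapshotter requires:

```
# Show the filesystem type of each mount
df -Th
# For xfs, ftype must be 1
xfs_info [filesystem_name] | grep ftype
```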
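The ftype check can be turned into a pass/fail test. A sketch, assuming the usual `xfs_info` output where the naming line contains `ftype=0` or `ftype=1`; `xfs_ftype_ok` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `xfs_info` output on stdin and succeed only if the
# filesystem was created with ftype=1.
xfs_ftype_ok() {
  grep -Eq 'ftype=1([^0-9]|$)'
}

# Usage (replace / with the mount point that holds /var/lib/containerd):
# xfs_info / | xfs_ftype_ok && echo "ftype=1: OK" || echo "reformat with mkfs.xfs -n ftype=1"
```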

6. Deploy etcd

6.1 Directory layout

```
$ mkdir -p /opt/etcd/{bin,cfg,ssl,data,wal}
$ wget -c https://github.com/etcd-io/etcd/releases/download/v3.5.0/etcd-v3.5.0-linux-amd64.tar.gz
$ tar -zxvf etcd-v3.5.0-linux-amd64.tar.gz
$ cp etcd-v3.5.0-linux-amd64/{etcd,etcdctl,etcdutl} /opt/etcd/bin/
# Put the etcd binaries on PATH
$ echo 'export PATH=$PATH:/opt/etcd/bin' >> /etc/profile
$ source /etc/profile
```

6.2 Certificates

```
mv {ca.pem,etcd-key.pem,etcd.pem} /opt/etcd/ssl
```

6.3 Configuration file /opt/etcd/cfg/etcd.yml

Reference: etcd/etcd.conf.yml.sample at main · etcd-io/etcd · GitHub. The file name must match the --config-file path in the etcd.service unit below.

On 192.168.66.31:

```
name: "etcd-1"
data-dir: "/opt/etcd/data"
wal-dir: "/opt/etcd/wal"
listen-peer-urls: https://192.168.66.31:2380
listen-client-urls: https://192.168.66.31:2379
initial-advertise-peer-urls: https://192.168.66.31:2380
advertise-client-urls: https://192.168.66.31:2379
initial-cluster: 'etcd-1=https://192.168.66.31:2380,etcd-2=https://192.168.66.41:2380,etcd-3=https://192.168.66.42:2380'
initial-cluster-token: 'etcd-cluster'
initial-cluster-state: 'new'
client-transport-security:
  cert-file: /opt/etcd/ssl/etcd.pem
  key-file: /opt/etcd/ssl/etcd-key.pem
  trusted-ca-file: /opt/etcd/ssl/ca.pem
peer-transport-security:
  cert-file: /opt/etcd/ssl/etcd.pem
  key-file: /opt/etcd/ssl/etcd-key.pem
  trusted-ca-file: /opt/etcd/ssl/ca.pem
```

On 192.168.66.41, only the member-specific fields change:

```
name: "etcd-2"
listen-peer-urls: https://192.168.66.41:2380
listen-client-urls: https://192.168.66.41:2379
initial-advertise-peer-urls: https://192.168.66.41:2380
advertise-client-urls: https://192.168.66.41:2379
```

On 192.168.66.42 (the name must be etcd-3 to match initial-cluster):

```
name: "etcd-3"
listen-peer-urls: https://192.168.66.42:2380
listen-client-urls: https://192.168.66.42:2379
initial-advertise-peer-urls: https://192.168.66.42:2380
advertise-client-urls: https://192.168.66.42:2379
```
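Since the three etcd.yml files differ only in member name and IP, each one can be rendered from a single template. A sketch, assuming the paths and cluster layout above; `render_etcd_config` is a hypothetical helper that writes to stdout:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the etcd.yml for one member.
# Usage: render_etcd_config NAME IP > /opt/etcd/cfg/etcd.yml
ETCD_CLUSTER='etcd-1=https://192.168.66.31:2380,etcd-2=https://192.168.66.41:2380,etcd-3=https://192.168.66.42:2380'

render_etcd_config() {
  local name="$1" ip="$2"
  cat << EOF
name: "${name}"
data-dir: "/opt/etcd/data"
wal-dir: "/opt/etcd/wal"
listen-peer-urls: https://${ip}:2380
listen-client-urls: https://${ip}:2379
initial-advertise-peer-urls: https://${ip}:2380
advertise-client-urls: https://${ip}:2379
initial-cluster: '${ETCD_CLUSTER}'
initial-cluster-token: 'etcd-cluster'
initial-cluster-state: 'new'
client-transport-security:
  cert-file: /opt/etcd/ssl/etcd.pem
  key-file: /opt/etcd/ssl/etcd-key.pem
  trusted-ca-file: /opt/etcd/ssl/ca.pem
peer-transport-security:
  cert-file: /opt/etcd/ssl/etcd.pem
  key-file: /opt/etcd/ssl/etcd-key.pem
  trusted-ca-file: /opt/etcd/ssl/ca.pem
EOF
}

# Example, on 192.168.66.42:
# render_etcd_config etcd-3 192.168.66.42 > /opt/etcd/cfg/etcd.yml
```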

6.4 Service configuration

```
cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
ExecStart=/opt/etcd/bin/etcd --config-file /opt/etcd/cfg/etcd.yml
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

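6.5 Start the service

Run this on all three nodes at about the same time: on the first node, systemctl may block until a second member is up and the cluster has quorum.

```
systemctl daemon-reload
systemctl enable etcd --now
```

6.6 Check cluster status

```
[root@k8s-master01 k8s]# ETCDCTL_API=3 etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/etcd.pem --key=/opt/etcd/ssl/etcd-key.pem --endpoints="https://192.168.66.31:2379,https://192.168.66.41:2379,https://192.168.66.42:2379" endpoint status --write-out=table
| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
| https://192.168.66.31:2379 | 1f46bee47a4f04aa | 3.5.0 | 20 kB | false | false | 15 | 68 | 68 | |
| https://192.168.66.41:2379 | b3e5838df5f510 | 3.5.0 | 20 kB | false | false | 15 | 68 | 68 | |
| https://192.168.66.42:2379 | a437554da4f2a14c | 3.5.0 | 25 kB | true | false | 15 | 68 | 68 | |
```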
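In the table above exactly one endpoint reports IS LEADER = true, which is what a healthy cluster should show. A sketch of a scripted check, assuming the pipe-separated table layout printed by `endpoint status --write-out=table`; `count_etcd_leaders` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: count leaders in `etcdctl endpoint status -w table` output.
# A healthy cluster prints exactly 1.
count_etcd_leaders() {
  awk -F'|' '
    # Data rows look like "| https://IP:2379 | ID | ... |"; because of the leading
    # pipe, field 2 is ENDPOINT and field 6 is IS LEADER.
    $2 ~ /https?:\/\// {
      leader = $6
      gsub(/[[:space:]]/, "", leader)
      if (leader == "true") n++
    }
    END { print n + 0 }'
}

# Usage:
# etcdctl <TLS and --endpoints flags> endpoint status --write-out=table | count_etcd_leaders
```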

7. Deploy k8s-master

7.1 Install kube-apiserver

7.1.1 Configuration file /opt/kubernetes/cfg/kube-apiserver.conf

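```
KUBE_APISERVER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--etcd-servers=https://192.168.66.31:2379,https://192.168.66.41:2379,https://192.168.66.42:2379 \
--bind-address=192.168.66.31 \
--secure-port=6443 \
--advertise-address=192.168.66.31 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth=true \
--token-auth-file=/opt/kubernetes/cfg/token.csv \
--service-node-port-range=30000-32767 \
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-issuer=https://kubernetes.default.svc.cluster.local \
--service-account-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/etcd.pem \
--etcd-keyfile=/opt/etcd/ssl/etcd-key.pem \
--enable-aggregator-routing=true \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
```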

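Notes:

- --logtostderr: log to stderr; set to false so that logs are written to files in --log-dir
- --log-dir: log directory
- --service-cluster-ip-range: virtual IP range for Services
- --enable-bootstrap-token-auth: enables the TLS bootstrapping mechanism
- --token-auth-file: bootstrap token file
- --service-node-port-range: default port range allocated to NodePort Services
- --tls-xxx-file: apiserver HTTPS certificate and key
- --audit-log-xxx: audit log settings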

If kube-proxy does not run on the host running the API server, you must enable the following kube-apiserver flag:

```
--enable-aggregator-routing=true
```

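7.1.2 kube-apiserver service configuration

```
cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```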

7.1.3 Start and enable at boot

```
systemctl daemon-reload
systemctl start kube-apiserver
systemctl enable kube-apiserver
```

7.1.4 Authorize the kubelet-bootstrap user to request certificates

This is the same binding as in 4.6; skip it if it already exists.

```
kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap
```

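7.2 Deploy kube-controller-manager

7.2.1 Configuration file /opt/kubernetes/cfg/kube-controller-manager.conf

```
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--leader-elect=true \
--kubeconfig=/opt/kubernetes/cfg/kube-controller-manager.kubeconfig \
--bind-address=127.0.0.1 \
--allocate-node-cidrs=true \
--cluster-cidr=10.244.0.0/16 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
--root-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
--cluster-signing-duration=87600h0m0s"
```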

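| Flag | Description |
| --- | --- |
| --cluster-cidr string | CIDR range of Pods in the cluster. Requires --allocate-node-cidrs to be true. |
| --service-cluster-ip-range | CIDR range of Service objects in the cluster. Requires --allocate-node-cidrs to be true. |
| -v, --v int | Log verbosity level. |
| --kubeconfig | Kubernetes authentication (kubeconfig) file |
| --leader-elect | Elect a leader automatically when several instances of the component run (HA) |
| --cluster-signing-cert-file, --cluster-signing-key-file | CA that automatically issues kubelet certificates; must be the same CA the apiserver uses |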
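--cluster-cidr (Pods) and --service-cluster-ip-range (Services) must not overlap. A sketch of a quick check in bash integer arithmetic, assuming IPv4 and canonical a.b.c.d/n notation; `ip_to_int`, `cidr_range` and `cidrs_overlap` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Hypothetical helpers (IPv4 only): check whether two CIDR blocks overlap.

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) + (o[1] << 16) + (o[2] << 8) + o[3] ))
}

# Print the first and last address of a CIDR block as integers.
cidr_range() {
  local ip="${1%/*}" bits="${1#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  local start=$(( $(ip_to_int "$ip") & mask ))
  echo "$start $(( start + (1 << (32 - bits)) - 1 ))"
}

# Succeed (exit 0) if the two CIDR blocks share at least one address.
cidrs_overlap() {
  local a_start a_end b_start b_end
  read -r a_start a_end <<< "$(cidr_range "$1")"
  read -r b_start b_end <<< "$(cidr_range "$2")"
  (( a_start <= b_end && b_start <= a_end ))
}

# Example with the values used above:
# cidrs_overlap 10.244.0.0/16 10.0.0.0/24 && echo "overlap" || echo "no overlap"
```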

7.2.2 controller-manager service configuration: /usr/lib/systemd/system/kube-controller-manager.service

```
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

7.2.3 Start and enable at boot

```
systemctl daemon-reload
systemctl enable kube-controller-manager --now
```

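7.3 Deploy kube-scheduler

7.3.1 Configuration file /opt/kubernetes/cfg/kube-scheduler.conf

```
KUBE_SCHEDULER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--kubeconfig=/opt/kubernetes/cfg/kube-scheduler.kubeconfig \
--leader-elect \
--bind-address=127.0.0.1"
```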

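--master: connects to the apiserver through the local insecure port 8080. That port no longer exists in v1.24 (insecure serving has been removed), so the scheduler connects over the secure port with --kubeconfig, using the kube-scheduler.kubeconfig created earlier.

--leader-elect: elects a leader automatically when several instances of the component run (HA)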

7.3.2 Service configuration: /usr/lib/systemd/system/kube-scheduler.service

```
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

7.3.3 Start and enable at boot

```
systemctl daemon-reload
systemctl enable kube-scheduler --now
```

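7.4 Check cluster status

```
[root@k8s-master01 ssl]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE                         ERROR
etcd-0               Healthy   {"health":"true","reason":""}
etcd-1               Healthy   {"health":"true","reason":""}
scheduler            Healthy   ok
etcd-2               Healthy   {"health":"true","reason":""}
controller-manager   Healthy   ok
```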

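8. Deploy k8s-node

```
mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}
# Copy the node binaries from kubernetes/server/bin in the server tarball
cp kubelet kube-proxy /opt/kubernetes/bin
```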

1 Deploy kubelet

1.1 Configuration file /opt/kubernetes/cfg/kubelet.conf

```
KUBELET_OPTS="--v=2 \
--hostname-override=k8s-master01 \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
--config=/opt/kubernetes/cfg/kubelet-config.yml \
--cert-dir=/opt/kubernetes/ssl \
--container-runtime-endpoint=unix:///run/containerd/containerd.sock \
--node-labels=node.kubernetes.io/node='' \
--feature-gates=IPv6DualStack=true"
```

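- --hostname-override: display name; must be unique in the cluster
- --network-plugin: enabled CNI in older versions. The flag was removed in v1.24 together with dockershim; with containerd, CNI is configured in containerd itself, which is why it is not set above
- --kubeconfig: this file does not exist yet; it is generated automatically once the certificate is approved and is then used to connect to the apiserver
- --bootstrap-kubeconfig: used on first start to request a certificate from the apiserver
- --config: configuration parameter file
- --cert-dir: directory where the kubelet's certificates are generated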

1.2 Configuration parameter file /opt/kubernetes/cfg/kubelet-config.yml

```
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
serializeImagePulls: false
readOnlyPort: 10255
cgroupDriver: systemd
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
runtimeRequestTimeout: 15m0s
```
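kubelet-config.yml sets `cgroupDriver: systemd`, and the Kubernetes documentation asks for the kubelet and the container runtime to use the same cgroup driver, so containerd's runc runtime should be switched to systemd cgroups too. A sketch, assuming /etc/containerd/config.toml is generated with `containerd config default`, whose output contains a `SystemdCgroup = false` line; `enable_systemd_cgroup` is a hypothetical filter:

```shell
#!/usr/bin/env bash
# Hypothetical filter: switch runc's SystemdCgroup option from false to true,
# leaving every other line of the containerd config untouched.
enable_systemd_cgroup() {
  sed -E 's/^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*)false/\1true/'
}

# Usage (needs containerd installed; restart it afterwards):
# mkdir -p /etc/containerd
# containerd config default | enable_systemd_cgroup > /etc/containerd/config.toml
# systemctl restart containerd
```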

1.3 Copy bootstrap.kubeconfig into the config directory

```
cp bootstrap.kubeconfig /opt/kubernetes/cfg
```

1.4 Manage kubelet with systemd: /usr/lib/systemd/system/kubelet.service

The container runtime here is containerd, so the unit is ordered after containerd.service rather than docker.service.

```
[Unit]
Description=Kubernetes Kubelet
After=containerd.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

1.5 Start the service and enable it at boot

```
systemctl daemon-reload
systemctl enable kubelet --now
```

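1.6 Approve the kubelet certificate request and join the cluster

```
# List certificate signing requests
kubectl get csr
NAME                                                   AGE     SIGNERNAME                                    REQUESTOR           REQUESTEDDURATION   CONDITION
node-csr-NrSNw-Gx8kR7VerABxUgHoM1mu71VbB8x598UXWOwM0   4m12s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   <none>              Pending

# Approve the request
kubectl certificate approve node-csr-NrSNw-Gx8kR7VerABxUgHoM1mu71VbB8x598UXWOwM0
certificatesigningrequest.certificates.k8s.io/node-csr-NrSNw-Gx8kR7VerABxUgHoM1mu71VbB8x598UXWOwM0 approved

# Check the node
kubectl get node
NAME           STATUS   ROLES    AGE   VERSION
k8s-master01   …        <none>   19s   v1.24.0
```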
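When several nodes bootstrap at once, copying each CSR name by hand gets tedious. A sketch that extracts the pending names, assuming the default `kubectl get csr` column layout where CONDITION is the last column; `pending_csr_names` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get csr --no-headers` output on stdin and
# print the names of requests whose CONDITION (last column) is Pending.
pending_csr_names() {
  awk '$NF == "Pending" { print $1 }'
}

# Usage: review the names, then approve them in one go (GNU xargs -r skips
# the call when the list is empty):
# kubectl get csr --no-headers | pending_csr_names | xargs -r kubectl certificate approve
```

Approving blindly grants node credentials to whoever holds the bootstrap token, so checking the list first is still worthwhile.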

2 Deploy kube-proxy

2.1 Configuration file /opt/kubernetes/cfg/kube-proxy.conf

```
KUBE_PROXY_OPTS="--v=2 \
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"
```

2.2 Configuration parameter file /opt/kubernetes/cfg/kube-proxy-config.yml

```
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: k8s-master01
clusterCIDR: 10.244.0.0/16
mode: ipvs
ipvs:
  scheduler: "rr"
iptables:
  masqueradeAll: true
```

2.3 kube-proxy.kubeconfig file

```
cp kube-proxy.kubeconfig /opt/kubernetes/cfg/
```

2.4 Service configuration: /usr/lib/systemd/system/kube-proxy.service

```
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf
ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

2.5 Start and enable at boot

```
systemctl daemon-reload
systemctl enable kube-proxy --now
```

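9. Deploy the CNI Network

9.1 Install IPVS

```
yum install ipset ipvsadm -y

cat > /etc/sysconfig/modules/ipvs.modules << EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules
```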
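kube-proxy runs with `mode: ipvs`, so it is worth confirming that the IPVS modules actually loaded. A sketch that checks `lsmod` output, assuming the module list ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh, nf_conntrack (adjust it to whatever your ipvs.modules loads); `missing_ipvs_modules` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `lsmod` output on stdin and print every module from
# the list below that is not loaded. No output means all are present.
missing_ipvs_modules() {
  local loaded mod
  loaded="$(awk 'NR > 1 { print $1 }')"   # column 1 = module name; skip the header
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    if ! grep -qx -- "$mod" <<< "$loaded"; then
      echo "$mod"
    fi
  done
}

# Usage: lsmod | missing_ipvs_modules
```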

9.2 Deploy Calico

```
wget https://docs.projectcalico.org/manifests/calico.yaml
kubectl apply -f calico.yaml

kubectl get pods -n kube-system
kubectl get node
```

9.3 Authorize the apiserver to access the kubelet

```
cat > apiserver-to-kubelet-rbac.yaml << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF

kubectl apply -f apiserver-to-kubelet-rbac.yaml
```

9.4 Deploy nginx and check its status

```
kubectl create deploy nginx --image=harborcloud.com/library/nginx:1.7.9

cat > nginx-svc.yaml << END
apiVersion: v1
kind: Service
metadata:
  name: web
  labels:
    name: nginx
spec:
  type: NodePort
  ports:
    - port: 8088
      targetPort: 80
      nodePort: 30500
  selector:
    app: nginx
END

kubectl create -f nginx-svc.yaml
```

Inspect the forwarding rules that kube-proxy created in IPVS mode:

```
[root@k8s-master01 k8s-install]# ipvsadm -Ln
```

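10. Add a New Worker Node

1 Copy the files of the already deployed node to the new nodes

```
scp -r /opt/kubernetes/bin/* root@192.168.66.41:/opt/kubernetes/bin/
scp -r /opt/kubernetes/bin/* root@192.168.66.42:/opt/kubernetes/bin/
scp -r /opt/kubernetes/ssl/* root@192.168.66.41:/opt/kubernetes/ssl/
scp -r /opt/kubernetes/ssl/* root@192.168.66.42:/opt/kubernetes/ssl/
scp -r /opt/kubernetes/cfg/* root@192.168.66.41:/opt/kubernetes/cfg/
scp -r /opt/kubernetes/cfg/* root@192.168.66.42:/opt/kubernetes/cfg/
scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.66.41:/usr/lib/systemd/system/
scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.66.42:/usr/lib/systemd/system/

# On each new node: remove the kubelet identity copied from the master,
# so that the node requests its own certificate through TLS bootstrapping
rm -f /opt/kubernetes/cfg/kubelet.kubeconfig
rm -f /opt/kubernetes/ssl/kubelet*
```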

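2 Change the hostname

k8s-node01:

```
vi /opt/kubernetes/cfg/kubelet.conf
--hostname-override=k8s-node01

vi /opt/kubernetes/cfg/kube-proxy-config.yml
hostnameOverride: k8s-node01
```

k8s-node02:

```
vi /opt/kubernetes/cfg/kubelet.conf
--hostname-override=k8s-node02

vi /opt/kubernetes/cfg/kube-proxy-config.yml
hostnameOverride: k8s-node02
```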
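The same two edits can be scripted instead of made in vi. A sketch, assuming the file layout above (the kubelet flag value ends at the next whitespace, and hostnameOverride sits at the start of its line); `set_node_name` is a hypothetical helper and relies on GNU `sed -i`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the hostname copied from k8s-master01 in the
# kubelet and kube-proxy configs of a new node.
# Usage: set_node_name CONFIG_DIR NEW_NAME
set_node_name() {
  local cfg_dir="$1" name="$2"
  # kubelet.conf: --hostname-override=<name> (value ends at the next whitespace)
  sed -i -E "s/(--hostname-override=)[^[:space:]]+/\1${name}/" "${cfg_dir}/kubelet.conf"
  # kube-proxy-config.yml: hostnameOverride: <name>
  sed -i -E "s/^(hostnameOverride:[[:space:]]*).*/\1${name}/" "${cfg_dir}/kube-proxy-config.yml"
}

# Example on k8s-node01:
# set_node_name /opt/kubernetes/cfg k8s-node01
```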

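3 Enable IPVS

```
yum install ipset ipvsadm -y

cat > /etc/sysconfig/modules/ipvs.modules << EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules
```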

4 Start the services

```
systemctl daemon-reload
systemctl enable kubelet --now
systemctl enable kube-proxy --now
```

5 On the master, approve the new nodes' kubelet certificate requests

```
kubectl get csr
kubectl certificate approve node-csr-4zTjsaVSrhuyhIGqsefxzVoZDCNKei-aE2jyTP81Uro
```

11. Deploy CoreDNS

The Service range is set in /opt/kubernetes/cfg/kube-apiserver.conf:

```
--service-cluster-ip-range=10.0.0.0/24
```

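Because kubelet-config.yml sets the following, CoreDNS must be deployed with the Service IP 10.0.0.2 (inside the Service range) and the domain cluster.local:

```
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
```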
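clusterDNS must lie inside --service-cluster-ip-range; the first address (10.0.0.1) is normally taken by the built-in `kubernetes` Service, which is why 10.0.0.2 is used. A sketch of a quick check in bash arithmetic, assuming IPv4; `ip_in_cidr` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper (IPv4 only): succeed if an address lies inside a CIDR block.

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) + (o[1] << 16) + (o[2] << 8) + o[3] ))
}

# Compare the network parts of the address and the block under the prefix mask.
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Example with the values above:
# ip_in_cidr 10.0.0.2 10.0.0.0/24 && echo "clusterDNS is inside the Service range"
```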

Install:

```
git clone https://github.com/coredns/deployment.git
cd deployment/kubernetes
./deploy.sh -r 10.0.0.0/24 -i 10.0.0.2 -d cluster.local > coredns.yaml
kubectl apply -f coredns.yaml
```

Test:

```
kubectl create deployment nginx --image=nginx
kubectl expose deployment nginx --port=80 --type=NodePort
```